Have you ever encountered a situation where an object is outside the camera frustum but is still rendered? Most likely it’s caused by the object’s bounds, which may take up much more space than the object itself 🙂.

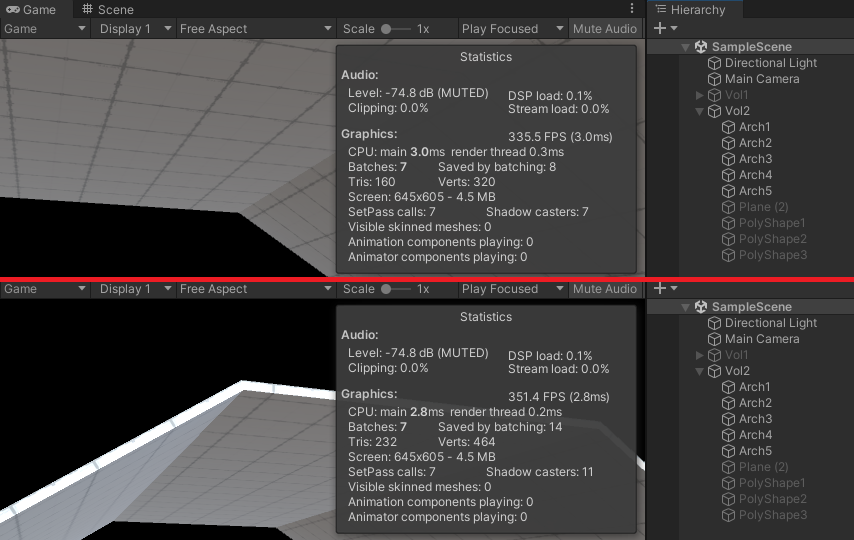

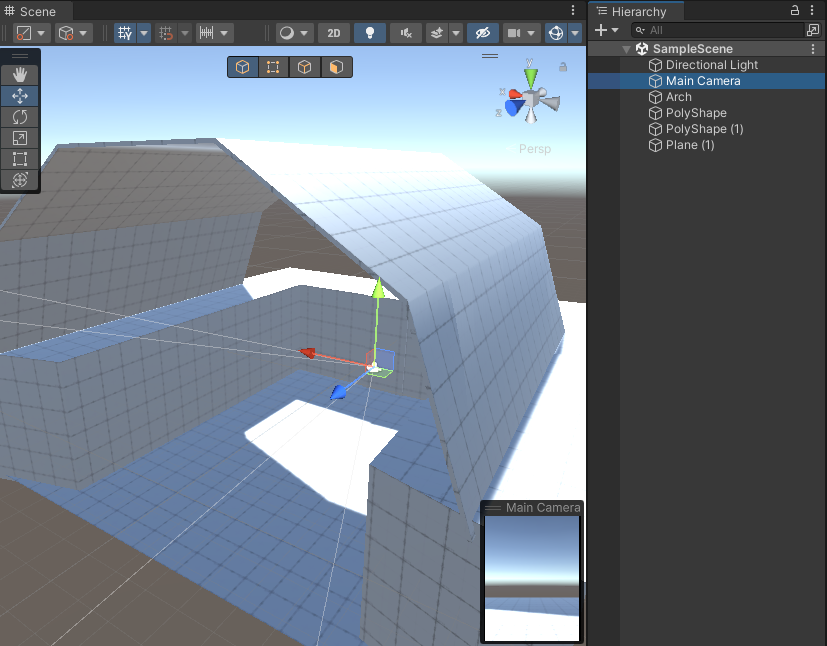

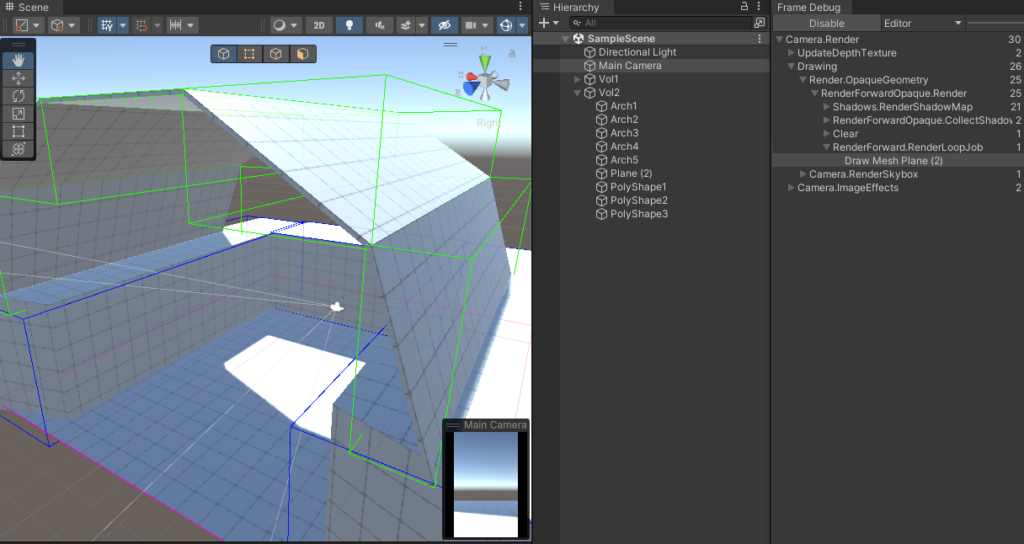

Let’s imagine that we have a simple scene with a few 3D objects and a camera:

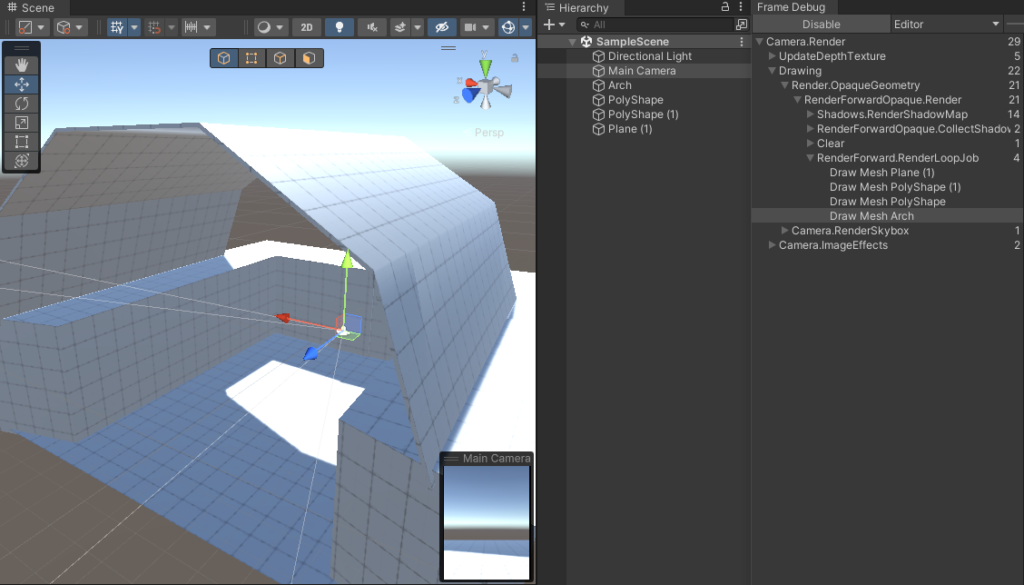

If we use the Frame Debugger to check how many objects are processed, we will see that all of them have been rendered.

This happens because the bounds of the objects overlap with the camera frustum. How can we check them? We can use a simple script which draws gizmos representing the renderer bounds.

using UnityEngine;

[ExecuteInEditMode]

public sealed class BoundingBoxView : MonoBehaviour

{

[SerializeField]

private Renderer rendererComponent;

[SerializeField]

private Color boxColor = Color.red;

[SerializeField]

private Color selectedBoxColor = Color.green;

private void Awake()

{

if (rendererComponent == null)

{

rendererComponent = GetComponent<Renderer>();

}

}

private void OnDrawGizmos()

{

if (rendererComponent != null)

{

var bounds = rendererComponent.bounds;

var gizmosColor = Gizmos.color;

Gizmos.color = boxColor;

Gizmos.DrawWireCube(bounds.center, bounds.size);

Gizmos.color = gizmosColor;

}

}

private void OnDrawGizmosSelected()

{

if (rendererComponent != null)

{

var bounds = rendererComponent.bounds;

var gizmosColor = Gizmos.color;

Gizmos.color = selectedBoxColor;

Gizmos.DrawWireCube(bounds.center, bounds.size);

Gizmos.color = gizmosColor;

}

}

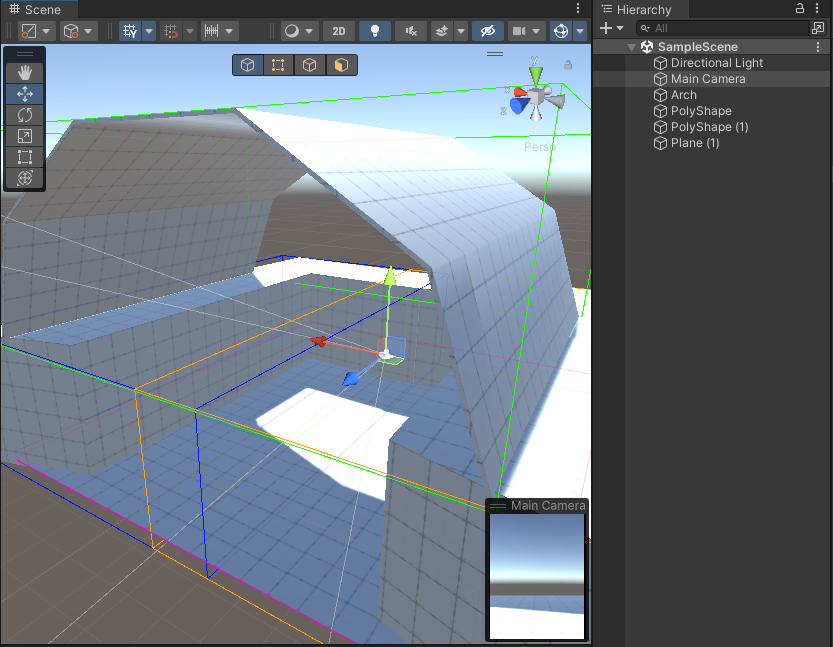

}When we add BoundingBoxView script to all 3d objects on the scene and set different color of gizmo for each one we will see something like in the Image 3.

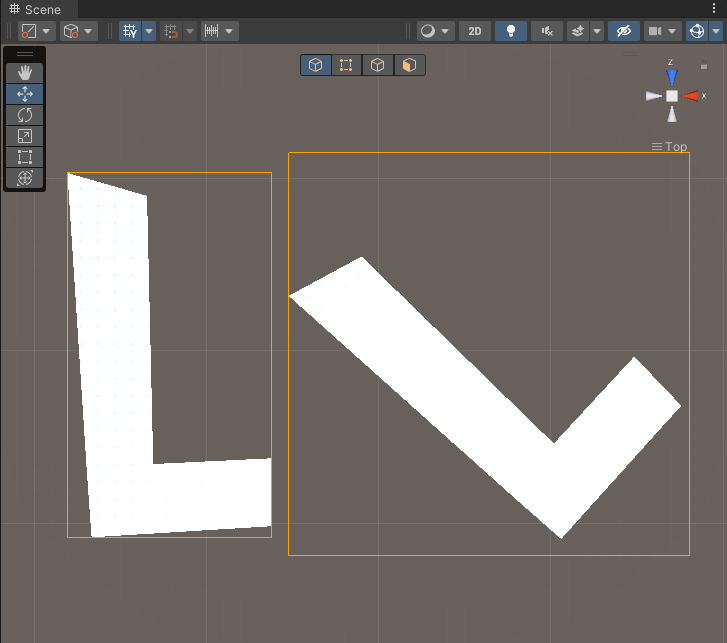

As we can see, all gizmos overlap with the camera frustum. This is the reason why all objects in the scene have been rendered. Can we optimize the scene somehow? Objects can be divided into smaller pieces, so that each part has its own, smaller bounding box.
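The overlap between bounds and frustum can also be checked from code instead of by eye. The snippet below is only a sketch: `FrustumOverlapCheck` and its fields are hypothetical names, not part of the original script, while `GeometryUtility.CalculateFrustumPlanes`, `GeometryUtility.TestPlanesAABB` and `Renderer.bounds` are existing Unity APIs. It assumes that a plane-versus-box test is a reasonable approximation of what frustum culling decides.

```csharp
using UnityEngine;

// Hypothetical helper (not from the original article): logs whether the
// renderer's world-space bounds overlap the frustum of a given camera.
public sealed class FrustumOverlapCheck : MonoBehaviour
{
    [SerializeField]
    private Camera targetCamera;

    [SerializeField]
    private Renderer rendererComponent;

    // Run from the component's context menu in the Inspector.
    [ContextMenu("Log Frustum Overlap")]
    private void LogFrustumOverlap()
    {
        // Fall back to the main camera and the local renderer if nothing was assigned.
        var cam = targetCamera != null ? targetCamera : Camera.main;
        var rend = rendererComponent != null ? rendererComponent : GetComponent<Renderer>();

        if (cam == null || rend == null)
        {
            Debug.LogWarning("FrustumOverlapCheck needs a camera and a renderer.", this);
            return;
        }

        // Six planes describing the camera frustum.
        Plane[] planes = GeometryUtility.CalculateFrustumPlanes(cam);

        // True when the axis-aligned box is inside or intersects the planes.
        bool overlaps = GeometryUtility.TestPlanesAABB(planes, rend.bounds);

        Debug.Log($"{name}: bounds overlap the frustum of {cam.name}: {overlaps}", this);
    }
}
```

If the log says the bounds overlap while the mesh itself is clearly off screen, the object is a good candidate for splitting.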

Bounding boxes are calculated in world space. This means that the edges of the box enclosing our 3D object are aligned to the world axes. For this reason the bounding box can change depending on the rotation of an object. This matters if your 3D scene is not axis-aligned, i.e. big objects are rotated relative to the world axes. Keep this in mind when you split your 3D objects into smaller pieces during scene optimization.
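To get a feeling for how much rotation inflates the box: a wall with a 10 × 1 footprint rotated by 45° around the vertical axis gets a world-space footprint of roughly 7.8 × 7.8, about six times the area. The sketch below compares the two sizes for a real object. `BoundsInflationReport` is a hypothetical name, and scaling the mesh bounds by `lossyScale` is an assumption that only approximates the tight box (it ignores skew from non-uniformly scaled, rotated parents).

```csharp
using UnityEngine;

// Hypothetical helper (not from the original article): compares the size of the
// mesh's own local box with the world-space, axis-aligned box of the renderer.
public sealed class BoundsInflationReport : MonoBehaviour
{
    [ContextMenu("Log Bounds Inflation")]
    private void LogBoundsInflation()
    {
        var meshFilter = GetComponent<MeshFilter>();
        var rend = GetComponent<Renderer>();

        if (meshFilter == null || meshFilter.sharedMesh == null || rend == null)
        {
            Debug.LogWarning("BoundsInflationReport needs a MeshFilter with a mesh and a Renderer.", this);
            return;
        }

        // Local-space box scaled to world units; rotation is deliberately ignored here.
        Vector3 scaled = Vector3.Scale(meshFilter.sharedMesh.bounds.size, transform.lossyScale);
        Vector3 tightSize = new Vector3(Mathf.Abs(scaled.x), Mathf.Abs(scaled.y), Mathf.Abs(scaled.z));

        // World-space axis-aligned box, which grows when the object is rotated.
        Vector3 worldSize = rend.bounds.size;

        float tightVolume = tightSize.x * tightSize.y * tightSize.z;
        float worldVolume = worldSize.x * worldSize.y * worldSize.z;

        Debug.Log($"{name}: local box {tightSize}, world box {worldSize}", this);

        // Flat meshes (for example planes) have zero volume, so skip the ratio for them.
        if (tightVolume > 0f)
        {
            Debug.Log($"{name}: world box volume is {worldVolume / tightVolume:F2}x the local box volume", this);
        }
    }
}
```

A ratio close to 1 means the object is roughly axis-aligned; a large ratio suggests that rotation, rather than the object's real size, is what pushes its bounds into the frustum.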

Finally, let’s imagine that your 3D scene is built from static objects and you have baked lightmaps. Everything has been batched into one big combined mesh by static batching and is displayed in one draw call. Is it worth splitting objects into smaller pieces? We won’t save draw calls, because the pieces will still be combined and rendered at once.

Good news 🙂! It’s still worth splitting objects into smaller parts. We will limit the number of vertices processed by the GPU, because the combined mesh is most likely built from submeshes which can be enabled or disabled separately. Unity will cull the parts which don’t need to be rendered, which simplifies the processed geometry.
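One rough way to compare a scene before and after splitting is to count how many renderers Unity reports as visible. This is a sketch under assumptions: `VisibleRendererCounter` is a hypothetical name, and `Renderer.isVisible` reports visibility for any camera, so in the editor the Scene view camera also counts. Treat the number as an indication, not an exact measurement.

```csharp
using UnityEngine;

// Hypothetical helper (not from the original article): counts the renderers
// that Unity currently reports as visible.
public sealed class VisibleRendererCounter : MonoBehaviour
{
    [ContextMenu("Log Visible Renderers")]
    private void LogVisibleRenderers()
    {
        // Collects all active renderers in the loaded scenes.
        // Newer Unity versions also offer FindObjectsByType for the same purpose.
        Renderer[] renderers = FindObjectsOfType<Renderer>();

        int visibleCount = 0;
        foreach (Renderer rend in renderers)
        {
            // isVisible is true when at least one camera needs this renderer,
            // which in the editor includes the Scene view camera.
            if (rend.isVisible)
            {
                visibleCount++;
            }
        }

        Debug.Log($"{visibleCount} of {renderers.Length} renderers are visible.", this);
    }
}
```

Running it in Play mode with the Scene view closed or pointed away gives a number that is closer to what the game camera alone needs.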